In the chapter “Beyond this horizon,” Sean Carroll discusses two related problems involving the properties of empty space. Before discussing the vacuum energy problem, he profiles the so-called hierarchy problem: the enormous gap between two fundamental energy scales. It’s about the effects of virtual particles.

The energy scale that characterizes the weak interactions (the Higgs field value, 246 GeV) and the one that characterizes gravity (the Planck scale, 10^18 GeV) are extremely different numbers; that’s the hierarchy we’re referring to. This would be weird enough on its own, but we need to remember that quantum-mechanical effects of virtual particles want to drive the weak scale up to the Planck scale. — Carroll, Sean (2012-11-13). The Particle at the End of the Universe: How the Hunt for the Higgs Boson Leads Us to the Edge of a New World (Kindle Locations 3585-3588). Penguin Publishing Group. Kindle Edition.

To specify a theory like the Standard Model, you have to give a list of the fields involved (quarks, leptons, gauge bosons, Higgs), but also the values of the various numbers that serve as parameters of the theory. … Essentially, we need to add up different contributions from various kinds of virtual particles to get the final answer. … If we measure a parameter to be much smaller than we expect, we declare there is a fine-tuning problem, and we say that the theory is “unnatural.” … For the most part, the parameters of the Standard Model are pretty natural. There are two glaring exceptions: the value of the Higgs field in empty space, and the energy density of empty space, also known as the “vacuum energy.” Both are much smaller than they have any right to be. … This giant difference between the expected value of the Higgs field in empty space and its observed value is known as the “hierarchy problem.” — Ibid (Kindle Locations 3557-3585).
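
To make the size of that “delicate cancellation” concrete, here is a minimal Python sketch of a toy model. It assumes the common rough estimate that virtual particles shift the Higgs mass-squared by an amount proportional to the cutoff squared, m_phys² = m_bare² + c·Λ², with a hypothetical coefficient c = 1. Λ = 10^18 GeV is the Planck scale from Carroll’s quote, and 125 GeV is the measured Higgs boson mass. The sketch only counts how many digits the bare parameter has to be “hand tuned” to; it is not a Standard Model calculation.

```python
from decimal import Decimal, getcontext

# 50 significant digits: enough to hold a cancellation between two ~10^36 numbers exactly.
getcontext().prec = 50


def bare_mass_squared(m_phys_gev: Decimal, cutoff_gev: Decimal, c: Decimal = Decimal(1)):
    """Toy relation m_phys^2 = m_bare^2 + c * cutoff^2, with c a hypothetical O(1) loop factor.

    Returns (m_bare^2, correction), both in GeV^2.
    """
    correction = c * cutoff_gev ** 2
    return m_phys_gev ** 2 - correction, correction


m_higgs = Decimal(125)       # measured Higgs boson mass, GeV (rounded)
planck = Decimal(10) ** 18   # Planck-scale cutoff from Carroll's quote, GeV

m_bare_sq, correction = bare_mass_squared(m_higgs, planck)

# Exact decimal arithmetic recovers the small physical value, 125^2 GeV^2:
print(m_bare_sq + correction)                  # 15625
# The bare value and the correction must cancel to roughly 1 part in:
print(f"{correction / m_higgs ** 2:.1E}")      # 6.4E+31
# In double precision (about 16 significant digits) the physical value vanishes entirely:
print(float(m_bare_sq) + float(correction))    # 0.0
```

The last line is the numerical face of “unnatural”: a bare parameter and a quantum correction, each around 10^36 GeV², must agree in their first 32 or so digits to leave the observed 15,625 GeV².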

Wikipedia describes the problem this way:

A hierarchy problem occurs when the fundamental value of some physical parameter, such as a coupling constant or a mass, in some Lagrangian is vastly different from its effective value, which is the value that gets measured in an experiment. This happens because the effective value is related to the fundamental value by a prescription known as renormalization, which applies corrections to it. Typically the renormalized value of parameters are close to their fundamental values, but in some cases, it appears that there has been a delicate cancellation between the fundamental quantity and the quantum corrections. Hierarchy problems are related to fine-tuning problems and problems of naturalness.

In particle physics, the most important hierarchy problem is the question that asks why the weak force is 10^24 times as strong as gravity. … More technically, the question is why the Higgs boson is so much lighter than the Planck mass (or the grand unification energy, or a heavy neutrino mass scale) … In a sense, the problem amounts to the worry that a future theory of fundamental particles, in which the Higgs boson mass will be calculable, should not have excessive fine-tunings.

It’s a fascinating situation: the need to essentially “hand code” (manually tune) the values of input parameters in mathematical models in order to obtain practical solutions. [1] The problem arises from the use of perturbation theory and renormalization.

Wikipedia:

Renormalization is a collection of techniques in quantum field theory, the statistical mechanics of fields, and the theory of self-similar geometric structures, that are used to treat infinities arising in calculated quantities by altering values of quantities to compensate for effects of their self-interactions.

For example, a theory of the electron may begin by postulating a mass and charge. However, in quantum field theory this electron is surrounded by a cloud of possibilities of other virtual particles such as photons, which interact with the original electron. Taking these interactions into account shows that the electron-system in fact behaves as if it had a different mass and charge. Renormalization replaces the originally postulated mass and charge with new numbers such that the observed mass and charge matches those originally postulated.

Renormalization specifies relationships between parameters in the theory when the parameters describing large distance scales differ from the parameters describing small distances. Physically, the pileup of contributions from an infinity of scales involved in a problem may then result in infinities. … Renormalization procedures are based on the requirement that certain physical quantities are equal to the observed values.
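
The last sentence of that excerpt can be illustrated with a made-up one-coupling “theory” (a hypothetical toy, not QED or any real model). Assume the coupling seen at energy E depends on a bare coupling g0 and a cutoff Λ through 1/g(E) = 1/g0 + B·ln(Λ/E), with B a toy constant. The renormalization condition fixes g0 so that g at a reference energy μ equals a “measured” value; predictions at other energies then no longer depend on Λ.

```python
import math

B = 0.1  # hypothetical coefficient of the logarithm (toy value)


def effective_coupling(g0: float, cutoff: float, energy: float) -> float:
    """Toy theory: the coupling seen at `energy` once virtual-particle effects between
    `energy` and `cutoff` are included, 1/g = 1/g0 + B * ln(cutoff / energy)."""
    return 1.0 / (1.0 / g0 + B * math.log(cutoff / energy))


def renormalized_bare_coupling(g_obs: float, mu: float, cutoff: float) -> float:
    """Renormalization condition: pick g0 so that effective_coupling(g0, cutoff, mu) == g_obs."""
    return 1.0 / (1.0 / g_obs - B * math.log(cutoff / mu))


g_obs, mu = 0.1, 1.0  # 'measured' coupling at the reference energy mu (made-up numbers)
for cutoff in (1e3, 1e6, 1e18):
    g0 = renormalized_bare_coupling(g_obs, mu, cutoff)
    prediction = effective_coupling(g0, cutoff, energy=100.0)
    print(f"cutoff={cutoff:.0e}  bare g0={g0:.4f}  predicted g(E=100)={prediction:.6f}")
```

The bare g0 changes with the cutoff while the prediction stays put: substituting the condition gives 1/g(E) = 1/g_obs + B·ln(μ/E), in which Λ has cancelled.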

Today, the point of view has shifted: on the basis of the breakthrough renormalization group insights of Nikolay Bogolyubov and Kenneth Wilson, the focus is on variation of physical quantities across contiguous scales, while distant scales are related to each other through “effective” descriptions. All scales are linked in a broadly systematic way, and the actual physics pertinent to each is extracted with the suitable specific computational techniques appropriate for each. Wilson clarified which variables of a system are crucial and which are redundant. [Cf. Sean Carroll’s perspective.]
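
A standard textbook illustration of Wilson-style renormalization (our choice of example, not one from the quoted article) is decimation of the one-dimensional Ising chain in zero field. Summing over every other spin leaves a chain of twice the spacing whose coupling K′ satisfies tanh K′ = tanh² K. Iterating that map shows how the effective description changes as we zoom out, one scale at a time.

```python
import math


def decimate(k: float) -> float:
    """One real-space RG step for the zero-field 1D Ising chain: trace out every other spin.
    The surviving spins interact with K' = 0.5 * ln(cosh(2K)), i.e. tanh(K') = tanh(K)**2."""
    return 0.5 * math.log(math.cosh(2.0 * k))


def flow(k: float, steps: int) -> list[float]:
    """Couplings after 0, 1, ..., steps decimations (each step doubles the length scale)."""
    ks = [k]
    for _ in range(steps):
        ks.append(decimate(ks[-1]))
    return ks


for k0 in (0.5, 1.0, 2.0):
    print(k0, [round(k, 4) for k in flow(k0, 6)])
```

Every finite starting coupling flows toward the fixed point K = 0, which is the RG way of saying the 1D chain has no ordered phase at any nonzero temperature; only the starting value of K matters, not the microscopic details discarded at each step.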

When developing quantum electrodynamics in the 1930s, Max Born, Werner Heisenberg, Pascual Jordan, and Paul Dirac discovered that in perturbative corrections many integrals were divergent.

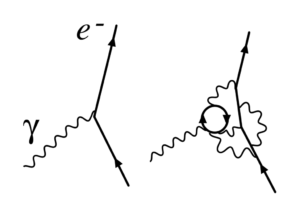

One way of describing the perturbation theory corrections’ divergences was discovered in 1946–49 by Hans Kramers, Julian Schwinger, Richard Feynman, and Shin’ichiro Tomonaga, and systematized by Freeman Dyson in 1949. The divergences appear in radiative corrections involving Feynman diagrams [see Figure 1 in article] with closed loops of virtual particles in them. [Lederman discusses these loop diagrams in his book; see Note 3.]

So I’m now paying closer attention to terms like coupling (coupling strength) and binding, as well as to remarks about the complex dynamics of valence and virtual particles. And there’s another hill to climb: quantum corrections.

Wikipedia:

In quantum mechanics, perturbation theory is a set of approximation schemes directly related to mathematical perturbation for describing a complicated quantum system in terms of a simpler one. The idea is to start with a simple system for which a mathematical solution is known, and add an additional “perturbing” Hamiltonian representing a weak disturbance to the system. If the disturbance is not too large, the various physical quantities associated with the perturbed system (e.g. its energy levels and eigenstates) can be expressed as “corrections” to those of the simple system. These corrections, being small compared to the size of the quantities themselves, can be calculated using approximate methods such as asymptotic series. The complicated system can therefore be studied based on knowledge of the simpler one.
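
A minimal numerical check of that idea, using a hypothetical two-level system: an unperturbed Hamiltonian with energies E1 and E2 plus a weak perturbation λV. A 2×2 symmetric matrix has a closed-form exact eigenvalue, so we can compare it with the first- and second-order corrections, E1 + λV11 and E1 + λV11 + λ²V12²/(E1 − E2). The numbers are arbitrary.

```python
import math

# Hypothetical two-level system: H = H0 + lam * V, with H0 = diag(E1, E2) and V real symmetric.
E1, E2 = 1.0, 3.0
V11, V12, V22 = 0.5, 1.0, -0.5


def exact_ground_energy(lam: float) -> float:
    """Lower eigenvalue of [[E1 + lam*V11, lam*V12], [lam*V12, E2 + lam*V22]]."""
    a = E1 + lam * V11
    d = E2 + lam * V22
    b = lam * V12
    return 0.5 * (a + d) - math.sqrt(0.25 * (a - d) ** 2 + b ** 2)


def perturbative_ground_energy(lam: float, order: int) -> float:
    """Rayleigh-Schrödinger perturbation theory for the level that starts at E1."""
    energy = E1
    if order >= 1:
        energy += lam * V11                          # first order: <1|V|1>
    if order >= 2:
        energy += lam ** 2 * V12 ** 2 / (E1 - E2)    # second order: |<2|V|1>|^2 / (E1 - E2)
    return energy


for lam in (0.01, 0.1, 0.5):
    exact = exact_ground_energy(lam)
    errors = [abs(perturbative_ground_energy(lam, n) - exact) for n in (0, 1, 2)]
    print(f"lam={lam}: exact={exact:.6f}, errors at orders 0/1/2 = "
          + ", ".join(f"{e:.2e}" for e in errors))
```

For small λ each extra order shrinks the error by roughly another factor of λ, which is exactly the “corrections to the simple system” picture in the excerpt.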

[1] There is a similar need in many types of engineering problems: specifying boundary conditions (constraints) and material properties for each context.

[2] Wikipedia

Perturbation theory is an important tool for describing real quantum systems, as it turns out to be very difficult to find exact solutions to the Schrödinger equation for Hamiltonians of even moderate complexity. The Hamiltonians to which we know exact solutions, such as the hydrogen atom, the quantum harmonic oscillator and the particle in a box, are too idealized to adequately describe most systems. Using perturbation theory, we can use the known solutions of these simple Hamiltonians to generate solutions for a range of more complicated systems.

The expressions produced by perturbation theory are not exact, but they can lead to accurate results as long as the expansion parameter, say α, is very small. Typically, the results are expressed in terms of finite power series in α that seem to converge to the exact values when summed to higher order. After a certain order n ~ 1/α however, the results become increasingly worse since the series are usually divergent (being asymptotic series).
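
The “gets better, then worse after order n ~ 1/α” behaviour is easy to reproduce with a classic non-QED example, Euler’s series: F(x) = ∫₀^∞ e^(−t)/(1 + xt) dt has the asymptotic expansion Σ (−1)^k k! x^k. Here x plays the role of α. The integral is evaluated numerically with Simpson’s rule (an implementation choice for this sketch) so the partial sums can be compared against it.

```python
import math


def euler_integral(x: float, upper: float = 60.0, n: int = 60_000) -> float:
    """F(x) = integral_0^inf exp(-t) / (1 + x t) dt via composite Simpson's rule on [0, upper].
    The tail beyond `upper` is smaller than exp(-upper) and is ignored; n must be even."""
    h = upper / n
    total = 1.0 + math.exp(-upper) / (1.0 + x * upper)   # endpoint terms f(0) + f(upper)
    for i in range(1, n):
        t = i * h
        total += (4 if i % 2 else 2) * math.exp(-t) / (1.0 + x * t)
    return total * h / 3.0


def partial_sum(x: float, order: int) -> float:
    """Asymptotic series F(x) ~ sum_k (-1)^k k! x^k, truncated after the x^order term."""
    return sum((-1) ** k * math.factorial(k) * x ** k for k in range(order + 1))


x = 0.1                       # small expansion parameter, like alpha
exact = euler_integral(x)
errors = [abs(partial_sum(x, n) - exact) for n in range(31)]
best = min(range(31), key=errors.__getitem__)
print(f"best truncation order: {best} (1/x = {1 / x:.0f}), error {errors[best]:.1e}")
print(f"error at order 30: {errors[30]:.1e}")
```

The error bottoms out near order 1/x = 10 and then grows without bound, because the k! in each term eventually beats the x^k.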

In the theory of quantum electrodynamics (QED), in which the electron–photon interaction is treated perturbatively, the calculation of the electron’s magnetic moment has been found to agree with experiment to eleven decimal places. In QED and other quantum field theories, special calculation techniques known as Feynman diagrams are used to systematically sum the power series terms.
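
As a sketch of what “summing the power series terms” means for the electron’s magnetic moment, here are the first three pure-QED terms of the anomaly a_e = (g − 2)/2 as a series in α/π. The coefficients (1/2 from Schwinger’s one-loop diagram, then roughly −0.328479 and 1.181241 at two and three loops), the value of α, and the measured a_e are rounded literature values supplied as assumptions for this illustration, not figures from the quoted article; the small mass-dependent, hadronic, and electroweak contributions are left out.

```python
import math

ALPHA = 1 / 137.035999            # fine-structure constant (rounded)
A_E_MEASURED = 0.00115965218      # measured electron anomaly (g - 2)/2 (rounded)

# Mass-independent QED coefficients C_n of (alpha/pi)^n, rounded:
# one loop (Schwinger), two loops, three loops.
COEFFS = [0.5, -0.328478965, 1.181241456]


def a_e_prediction(n_loops: int) -> float:
    """Partial sum a_e ~ sum_n C_n (alpha/pi)^n, keeping the first n_loops terms."""
    x = ALPHA / math.pi
    return sum(c * x ** (n + 1) for n, c in enumerate(COEFFS[:n_loops]))


for n in range(1, len(COEFFS) + 1):
    pred = a_e_prediction(n)
    print(f"{n} loop(s): a_e = {pred:.11f}, minus experiment = {pred - A_E_MEASURED:+.1e}")
```

Because α/π is about 0.0023, each additional loop order moves the prediction about two to three decimal places closer to experiment, even though the number of Feynman diagrams per order grows rapidly.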

[3] Lederman, Leon M.; Hill, Christopher T. (2011-11-29). Symmetry and the Beautiful Universe. Prometheus Books. Kindle Edition.

The real power of Feynman diagrams is that we can systematically compute physical processes in relativistic quantum theories to a high degree of precision. This comes from what we call the quantum corrections, or the so-called higher-order processes. In figure 29, we show the second-order quantum corrections to the scattering problem of two electrons. This is a set of diagrams, each of which must be computed in detail and then added together including the previous diagram in figure 27, to get the final total result for the T-matrix. (The T-matrix, as noted above, is essentially the quantum version of the potential energy between the electrons and describes the scattering process.) This gives the total T-matrix to a precision of about 1/10,000. We can then go to the third order of higher quantum corrections to try to get even more precise agreement with experiment. Third-order calculations, however, are extremely difficult and very tiring for theoretical physicists. — Ibid (p. 252).

In the second-order processes of figure 29 we now see the appearance of the “loop diagrams.” The first diagram contains a loop representing a particle and antiparticle being spontaneously produced and then reannihilating. They contain a looping flow of the particle’s momentum and energy. Here we must sum up all possible momenta and energies that can occur in the loops, such that the overall incoming and outgoing energy and momentum is conserved. The Feynman loops present us with a new problem that has bothered physicists in many different ways for many years: put simply, when we compute the loop sums for certain loop diagrams, we get infinity! The processes we compute seem to become nonsensical. The theory seems to crash and burn. However, as the loop momenta become larger and larger, the loop is physically occupying a smaller and smaller volume of space and time, by the quantum inverse relationship between wavelength (size) and momentum. So in fact, we can only sum up the loop momenta to some large scale, or correspondingly, down to some small distance scale of space, for which we still trust the structure of our theory. … Interpreted properly, the loop diagrams actually tell us how to examine the physics at different distance scales, as though we have a theoretical microscope with a variable magnification power. — Ibid (pp. 253-254). [Cf. Carroll’s and Wilson’s use of effective theory.]
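
The cutoff idea can be sketched with two toy Euclidean “loop integrals” in which the angular integration has already been done and m stands in for a particle mass (a deliberate simplification, not a specific diagram from the book): I_log(Λ) = ∫₀^Λ k³/(k² + m²)² dk grows only logarithmically with the cutoff, while I_quad(Λ) = ∫₀^Λ k³/(k² + m²) dk grows like Λ²/2. Both have closed forms. The quadratic kind of sensitivity is what lets virtual particles pull the Higgs mass toward the cutoff in Carroll’s hierarchy problem.

```python
import math


def log_divergent(cutoff: float, m: float = 1.0) -> float:
    """Closed form of integral_0^cutoff k^3 / (k^2 + m^2)^2 dk; grows like ln(cutoff)."""
    s = cutoff ** 2 + m ** 2
    return 0.5 * (math.log(s / m ** 2) + m ** 2 / s - 1.0)


def quadratically_divergent(cutoff: float, m: float = 1.0) -> float:
    """Closed form of integral_0^cutoff k^3 / (k^2 + m^2) dk; grows like cutoff^2 / 2."""
    s = cutoff ** 2 + m ** 2
    return 0.5 * (cutoff ** 2 - m ** 2 * math.log(s / m ** 2))


for cutoff in (1e1, 1e2, 1e3, 1e4):
    print(f"cutoff={cutoff:.0e}  log-type={log_divergent(cutoff):8.3f}  "
          f"quadratic-type={quadratically_divergent(cutoff):.3e}")
```

Each tenfold increase in the cutoff adds only about ln 10 ≈ 2.3 to the logarithmic integral but multiplies the quadratic one by about 100. A logarithmic divergence can be absorbed into a parameter with little fuss; a quadratic one makes the answer hostage to wherever we stop trusting the theory.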

Physics uses many of the same problem-decomposition and analysis methods as other technical disciplines. For example, perturbation theory is used in many types of engineering. A friend of mine is a structural/civil engineer. He uses a computer program called Femap for structural design and analysis. Femap (Finite Element Modeling And Postprocessing) uses finite element models “to virtually model components, assemblies, or systems to determine behavior under a given set of boundary conditions.”

One of the challenges in designing modern structures (e.g., spacecraft, aircraft, skyscrapers) is keeping weight down: optimizing performance while minimizing weight. As in physics, simpler systems with well-understood, well-behaved solutions (e.g., beams) are used to analyze more complicated ones. Breaking a problem into smaller ones is another common strategy.

The finite element method “uses variational methods from the calculus of variations to approximate a solution by minimizing an associated error function.” The Wikipedia article on the calculus of variations includes an example for the wave equation and the action principle, as well as a “See also” entry for perturbation methods.

In structural mechanics, finite element analysis is used for modal analysis (as in physics): determining the natural frequencies and mode shapes of a structure by solving an eigenvalue problem.

Here’s the connection to the hierarchy problem in physics. One of the challenges is avoiding GIGO (garbage in, garbage out). So engineering analysis may involve physically exciting a structure (applying an impulse load or shock) and measuring its response in order to calibrate the model, that is, to tune its input parameters so that its predictions match what is measured. This can be as simple as hitting the object with a hammer and measuring the vibrations.
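
Here is a hedged toy version of modal analysis plus hammer-test calibration (not how Femap or NX NASTRAN work internally). Assume two equal masses m in a chain, the first tied to ground by a spring k and to the second mass by another spring k. The natural frequencies come from the eigenvalues of M⁻¹K, here ω² = (k/m)(3 ∓ √5)/2, and the mode shapes from the matching eigenvectors. If a hammer test measures the lowest frequency, we can back out the stiffness the model should use. All numbers, including the “measured” 17 Hz, are made up.

```python
import math

SQRT5 = math.sqrt(5.0)


def natural_frequencies_hz(k: float, m: float) -> tuple[float, float]:
    """Two equal masses m; spring k from ground to mass 1, spring k from mass 1 to mass 2.
    Stiffness K = [[2k, -k], [-k, k]] and mass M = m*I, so the eigenvalues of K/m are
    omega^2 = (k/m) * (3 -/+ sqrt(5)) / 2. Returns (f_low, f_high) in Hz."""
    omega_sq_low = (k / m) * (3.0 - SQRT5) / 2.0
    omega_sq_high = (k / m) * (3.0 + SQRT5) / 2.0
    return math.sqrt(omega_sq_low) / (2 * math.pi), math.sqrt(omega_sq_high) / (2 * math.pi)


def mode_shapes() -> tuple[float, float]:
    """Amplitude ratio x2/x1 for each mode: in phase (low mode), out of phase (high mode)."""
    return (1.0 + SQRT5) / 2.0, (1.0 - SQRT5) / 2.0


def calibrate_stiffness(measured_low_hz: float, m: float) -> float:
    """Model updating: choose k so the model's lowest frequency equals the measured one."""
    omega = 2 * math.pi * measured_low_hz
    return m * omega ** 2 * 2.0 / (3.0 - SQRT5)


m = 2.0             # kg, hypothetical mass
k_nominal = 5.0e4   # N/m, hypothetical stiffness taken from drawings
f_measured = 17.0   # Hz, hypothetical hammer-test result for the first mode

print("nominal model (Hz):", natural_frequencies_hz(k_nominal, m))
k_cal = calibrate_stiffness(f_measured, m)
print(f"calibrated k = {k_cal:.4g} N/m ->", natural_frequencies_hz(k_cal, m))
print("mode shapes x2/x1:", mode_shapes())
```

Calibrating one parameter to one measured frequency is the engineering cousin of a renormalization condition: an input is fixed by demanding that the model reproduce an observed quantity, and the model is then used to predict everything else.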

My friend tells me stories about novice engineers modeling a design for structural analysis. They may be well versed in a CAD tool and in processing its output in Femap with NX NASTRAN, but their finite element analyses don’t match the physical tests. They import a design from a CAD program (e.g., CATIA or AutoCAD) and mesh everything, typically with solid elements, which are not accurate unless the mesh is very fine. Such fine meshes can create large models that are difficult to troubleshoot.

Choosing the correct type of finite element is also critical in modeling structural effects. Blanket meshing typically uses solid or plate elements for all structures, including structures that are actually beams. Those beam structures should instead be modeled with beam elements (CBAR, CBEAM, CROD). Because of its formulation, a beam element gives exact results (within Euler-Bernoulli beam theory, for loads applied at its nodes), whereas plate elements give only approximate results. Joints can also be modeled more accurately with beam elements.
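
The claim about beam elements can be checked with a small hand-rolled sketch (an illustration under stated assumptions, not NASTRAN’s CBAR/CBEAM formulation). It assumes Euler-Bernoulli beam theory and the standard two-node cubic beam element with a transverse displacement and a rotation at each node. For a cantilever with a tip load P, beam theory gives a tip deflection of PL³/(3EI), and the finite element model reproduces it whether we use one element or many. The material, section, and load values are hypothetical.

```python
def beam_element_stiffness(ei: float, length: float) -> list[list[float]]:
    """4x4 Euler-Bernoulli beam element stiffness; DOFs are (v1, theta1, v2, theta2),
    the transverse displacement and rotation at each end node."""
    L = length
    base = [
        [12.0, 6 * L, -12.0, 6 * L],
        [6 * L, 4 * L ** 2, -6 * L, 2 * L ** 2],
        [-12.0, -6 * L, 12.0, -6 * L],
        [6 * L, 2 * L ** 2, -6 * L, 4 * L ** 2],
    ]
    scale = ei / L ** 3
    return [[scale * entry for entry in row] for row in base]


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Dense Gaussian elimination with partial pivoting (fine for tiny systems)."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def cantilever_tip_deflection(ei: float, length: float, tip_load: float, n_elements: int) -> float:
    """Assemble n equal beam elements, clamp node 0, apply tip_load at the free end,
    and return the transverse displacement of the tip node."""
    n_dof = 2 * (n_elements + 1)
    k_global = [[0.0] * n_dof for _ in range(n_dof)]
    ke = beam_element_stiffness(ei, length / n_elements)
    for e in range(n_elements):
        dofs = [2 * e, 2 * e + 1, 2 * e + 2, 2 * e + 3]
        for i in range(4):
            for j in range(4):
                k_global[dofs[i]][dofs[j]] += ke[i][j]
    force = [0.0] * n_dof
    force[-2] = tip_load                  # transverse force on the last node
    free = list(range(2, n_dof))          # clamped end: drop v0 and theta0
    k_free = [[k_global[i][j] for j in free] for i in free]
    u = solve(k_free, [force[i] for i in free])
    return u[-2]


EI = 1.6e6      # N*m^2, hypothetical (E = 200 GPa, I = 8e-6 m^4)
LENGTH = 2.0    # m, hypothetical
P = 1000.0      # N, hypothetical tip load

exact = P * LENGTH ** 3 / (3 * EI)
for n in (1, 2, 8):
    fe = cantilever_tip_deflection(EI, LENGTH, P, n)
    print(f"{n} element(s): tip deflection {fe:.6e} m (beam theory: {exact:.6e} m)")
```

A single beam element already matches beam theory to rounding error, which is why a coarse model built from the right element type can beat a huge solid mesh of the same part.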

Fermilab’s “Doc Don” (Dr. Don Lincoln) presents a general framework for perturbation theory in this 8.5-minute YouTube video, “Theoretical physics: insider’s tricks.”

Published on Mar 24, 2016

Theoretical particle physics employs very difficult mathematics, so difficult in fact that it is impossible to solve the equations. In order to make progress, scientists employ a mathematical technique called perturbation theory. This method makes it possible to solve very difficult problems with very good precision. In this video, Fermilab’s Dr. Don Lincoln shows just how easy it is to understand this powerful technique.

This seminar discusses the challenge of solving quantum mechanical equations:

Garnet Chan, Bren Professor of Chemistry, Chemistry and Chemical Engineering

SIMULATING THE QUANTUM WORLD ON A CLASSICAL COMPUTER

Quantum mechanics is the fundamental theory underlying all of chemistry, materials science, and the biological world, yet solving the equations appears to be an exponentially hard problem. Can we simulate the quantum world using classical computers? I will discuss why simulating quantum mechanics is not as hard as it first appears and give some examples of how modern-day quantum-mechanical calculations are changing our understanding of practical chemistry and materials science. — Caltech | Alumni Reunion Weekend 2017 (80th Annual Seminar Day) Program
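
Why solving the equations “appears to be an exponentially hard problem” shows up in simple arithmetic. Assume the naive approach of storing the full wavefunction of n two-level systems (spins or qubits): that takes 2^n complex amplitudes, at an assumed 16 bytes each (two 64-bit floats). The sketch shows only this brute-force cost; the seminar’s point is that cleverer methods can often avoid paying it.

```python
def state_vector_bytes(n_spins: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for the full wavefunction of n two-level systems (spins or qubits):
    2**n complex amplitudes, assuming 16 bytes each (two 64-bit floats)."""
    return 2 ** n_spins * bytes_per_amplitude


for n in (10, 30, 50, 80):
    print(f"{n:>2} spins: {state_vector_bytes(n):.2e} bytes")
```

Thirty spins already need about 17 GB, and fifty need about 18 petabytes; each added spin doubles the bill.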

Chad Orzel’s article “In Physics, Infinity Is Easy But Ten Is Hard” (March 14, 2017) discusses mathematical approximations (smoothing by abstraction) in physics: “This all comes back to my half-joking description of physics as the science of knowing when to approximate cows as spheres. We’re always looking for the simplest possible models of things, dealing with only the most essential features, and it turns out that an effectively infinite number of interacting objects can be abstracted away much more easily than you can handle a countable number of interacting objects.”

In a related article, “The Hardest Thing To Grasp In Physics? Thinking Like A Physicist” (August 29, 2016), Orzel writes: “… so what do I mean by, ‘Think like a physicist?’ It’s something I’ve been intermittently talking about since I started blogging here at Forbes, a very particular approach to problem-solving that involves abstracting away a lot of complication to get to the simplest possible model that captures the essential elements of the problem. … This kind of simplified model-building isn’t completely unique to physics, but we seem to rely on it more heavily than other sciences. … This kind of quick-and-dirty mental modeling is efficient and also extremely versatile. When you find that the simplest approximation doesn’t quite match reality, it’s often very straightforward to make a small tweak that improves the prediction without needing to start over from scratch (moving from point cows to spherical cows, as it were). And you can iterate this process, slowly building up a more and more accurate model from lots of steps that are individually pretty simple. Of course, while it’s a powerful method for thinking about the world, it also has its pitfalls.”
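
The “small tweak” style of refinement can be illustrated (our example, not Orzel’s) with the period of a simple pendulum. The small-angle model T0 = 2π√(L/g) is the spherical cow; the known series T = T0(1 + θ0²/16 + 11θ0⁴/3072 + …) supplies successive tweaks; and the exact period, T0 / AGM(1, cos(θ0/2)) using the arithmetic-geometric mean, lets us score each step. The 1 m length and 30° amplitude are hypothetical.

```python
import math


def agm(a: float, b: float, iterations: int = 20) -> float:
    """Arithmetic-geometric mean; converges quadratically, so 20 iterations is ample."""
    for _ in range(iterations):
        a, b = 0.5 * (a + b), math.sqrt(a * b)
    return a


def period_exact(length: float, theta0: float, g: float = 9.81) -> float:
    """Exact simple-pendulum period for amplitude theta0 (radians):
    T = 2*pi*sqrt(L/g) / AGM(1, cos(theta0/2))."""
    return 2 * math.pi * math.sqrt(length / g) / agm(1.0, math.cos(theta0 / 2))


def period_approx(length: float, theta0: float, order: int, g: float = 9.81) -> float:
    """Order 0: small-angle model. Orders 1 and 2 add the first two correction terms of
    T = T0 * (1 + theta0^2/16 + 11*theta0^4/3072 + ...)."""
    t0 = 2 * math.pi * math.sqrt(length / g)
    corrections = [1.0, theta0 ** 2 / 16, 11 * theta0 ** 4 / 3072]
    return t0 * sum(corrections[: order + 1])


length, theta0 = 1.0, math.radians(30)   # hypothetical 1 m pendulum released at 30 degrees
exact = period_exact(length, theta0)
for order in range(3):
    approx = period_approx(length, theta0, order)
    print(f"order {order}: T = {approx:.6f} s, relative error {abs(approx - exact) / exact:.1e}")
```

Each refinement reuses the previous model unchanged and adds one simple term, and the error drops by well over an order of magnitude each time: the iterative, individually simple steps Orzel describes.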